Be cautious of small studies.

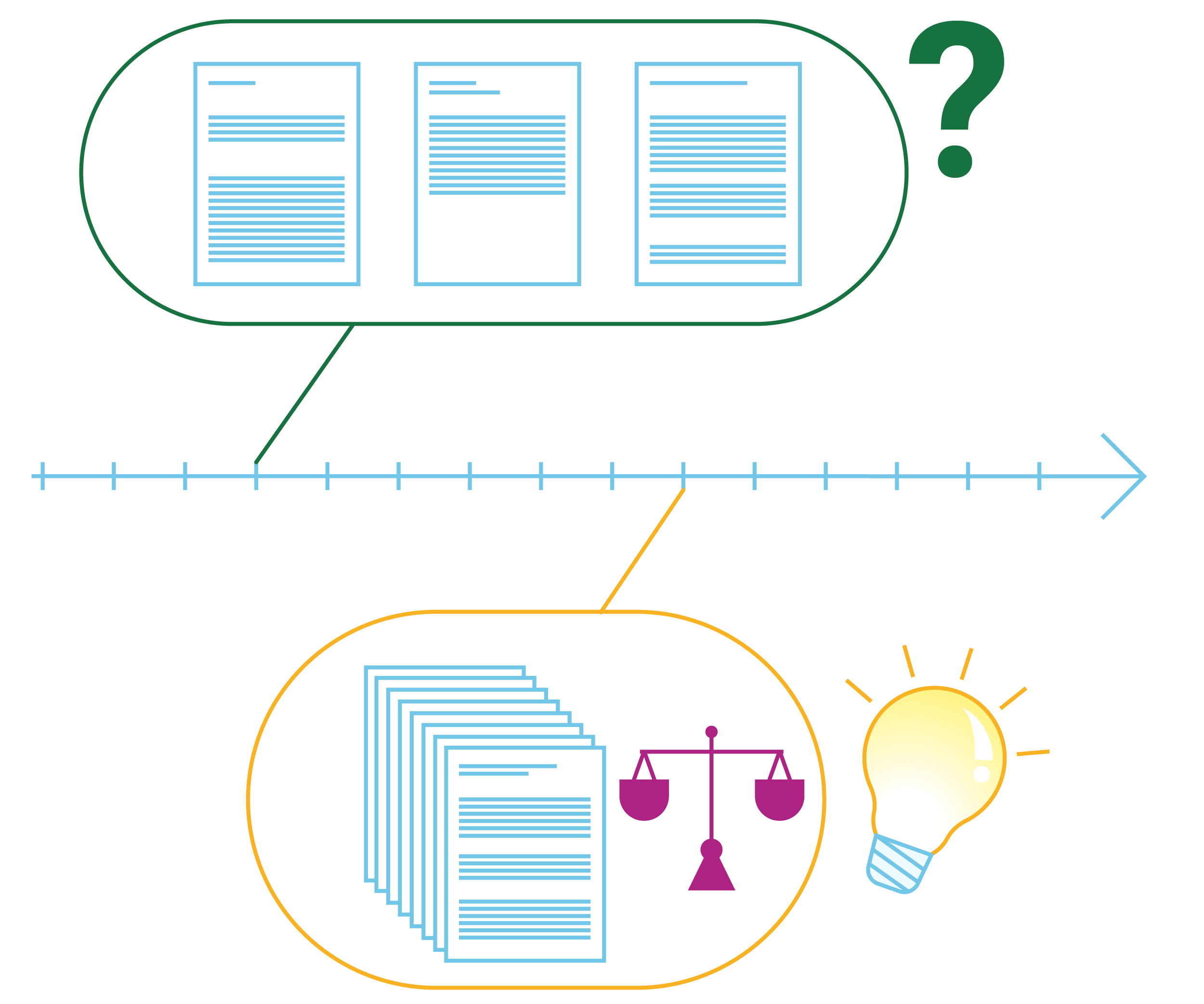

For the findings of a comparison of health actions to be reliable, the comparison groups should be large enough to be sure that the results are not happening by chance. Otherwise, the results might be misleading, and appear to be larger or smaller than the actual difference between the health actions being compared.

Explanation

To find the results of a comparison of health actions, we count the number of “events” (occurrences of the outcomes of interest) in each of the groups to see how big the difference (the effect) is. A large-enough comparison does not necessarily mean a comparison with a very large number of people. It means a comparison with enough events to be sure that the results reflect the true difference.

To understand why a comparison needs enough events, it can be helpful to think of two bags, Bag A and Bag B, each containing five red pebbles and five black pebbles. When you take a pebble out of the bag, there is an equal chance of it being red or black. In this example, an event is taking out a red pebble. Imagine that you take six pebbles out of each bag. Knowing the truth, you would expect to take out three red and three black pebbles from each bag. However, these are the results:

- From Bag A, you take 4 red pebbles and 2 black pebbles.

- From Bag B, you take 1 red pebble and 5 black pebbles.

If you do not know how many red and black pebbles there are in the bags, you might conclude that Bag A and Bag B are different, and that there are more red pebbles in Bag A than in Bag B. However, this is misleading. The truth is that there is no difference between Bag A and Bag B. The results happened by chance. Because the comparison is small, with a small number of events, even large differences can occur by chance. In this example, 4 out of 6 (67%) of the pebbles from Bag A were red and 1 out of 6 (17%) of the pebbles from Bag B were red. That is a big difference of 50 percentage points.
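To see how often chance alone produces a gap like this, the pebble example can be worked out exactly. The sketch below is only an illustration: the function names `prob_red` and `prob_gap_at_least` are made up for this example, and it assumes pebbles are not put back into the bag after being taken out.

```python
from fractions import Fraction
from math import comb


def prob_red(k, n_red=5, n_black=5, draws=6):
    """Exact chance of taking out exactly k red pebbles (no pebble is put back)."""
    if k < 0 or k > n_red or draws - k < 0 or draws - k > n_black:
        return Fraction(0)
    return Fraction(
        comb(n_red, k) * comb(n_black, draws - k),
        comb(n_red + n_black, draws),
    )


def prob_gap_at_least(gap, draws=6):
    """Chance that two identical bags differ by at least `gap` red pebbles."""
    counts = range(draws + 1)
    return sum(
        (
            prob_red(a, draws=draws) * prob_red(b, draws=draws)
            for a in counts
            for b in counts
            if abs(a - b) >= gap
        ),
        Fraction(0),
    )


# Chance of a gap of 3 or more red pebbles, as in the example (4 versus 1)
print(prob_gap_at_least(3))
# Chance of a gap of 2 or more red pebbles
print(prob_gap_at_least(2))
```

Under these assumptions, a gap of three or more red pebbles between two identical bags turns up about 1 time in 42, and a gap of two or more turns up in roughly 18% of comparisons. Neither bag is different from the other, yet noticeable gaps are not rare when only six pebbles are taken out.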

If there were 100 pebbles in each bag (50 red and 50 black), and you took out 60 from each, you would probably take out something close to 30 red pebbles from each bag. The more pebbles you take out, the closer you are likely to get to the truth.
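A small simulation can illustrate how the results settle down as more pebbles are taken out. This is a rough sketch, not a formal analysis: it assumes each 100-pebble bag holds 50 red and 50 black pebbles, and the function name `red_share_spread`, the number of trials and the random seed are arbitrary choices made for this example.

```python
import random
import statistics


def red_share_spread(n_red, n_black, draws, trials=10_000, seed=0):
    """Spread (standard deviation) of the share of red pebbles over many repeats."""
    rng = random.Random(seed)
    bag = ["red"] * n_red + ["black"] * n_black
    shares = []
    for _ in range(trials):
        sample = rng.sample(bag, draws)  # take pebbles out without putting them back
        shares.append(sample.count("red") / draws)
    return statistics.pstdev(shares)


small = red_share_spread(5, 5, 6)      # 6 pebbles from a bag of 10
large = red_share_spread(50, 50, 60)   # 60 pebbles from a bag of 100

print(f"Spread of red share, 6 pebbles:  {small:.3f}")
print(f"Spread of red share, 60 pebbles: {large:.3f}")
```

In theory, the spread of the red share is about 0.14 when six pebbles are taken out and about 0.04 when 60 are taken out, so the simulated values should land close to those figures. With the larger comparison, the share of red pebbles stays much nearer to the true 50%.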

There are several reasons why small studies may overestimate the effects of health actions. One reason is reporting. Small studies are more likely to be published, and their outcomes are more likely to be reported, when there appears to be a big effect. Another reason is that small studies may have a higher risk of bias than large studies because of how they are designed and carried out. It is difficult to predict when or why effect estimates from small studies will differ from effect estimates from large studies with a low risk of bias, or to be certain about the reasons for the differences. However, systematic reviews should consider the risk of small studies being biased towards larger effects and consider potential reasons for bias.

Example

In some countries, based on the results of summaries of small studies, intravenous (IV) magnesium was given to heart attack patients to limit damage to the heart muscle, prevent serious changes in heart rhythm and reduce the risk of death. A controversy erupted in 1995, when a large well‐designed fair comparison with 58,050 participants did not demonstrate any beneficial effect of IV magnesium, contradicting the earlier summaries of smaller trials.

Remember: You can’t be sure about the reported effects of health actions that are based on small studies with few people or few events. The results of such studies can be misleading.

Primary school

- Lesson 7. Fair comparisons with many people. In: The Health Choices Book: Learning to think carefully about treatments. A health science book for primary school children.

- Animation: 7: Fair comparisons with many people – The Health Choices Book

Secondary school

- Lesson 7. Large-enough groups. In: Be smart about your health: Think critically, make smart choices.

Other

- Blog: Fair comparisons with few people or outcome events can be misleading. Students 4 Best Evidence.